Introduction

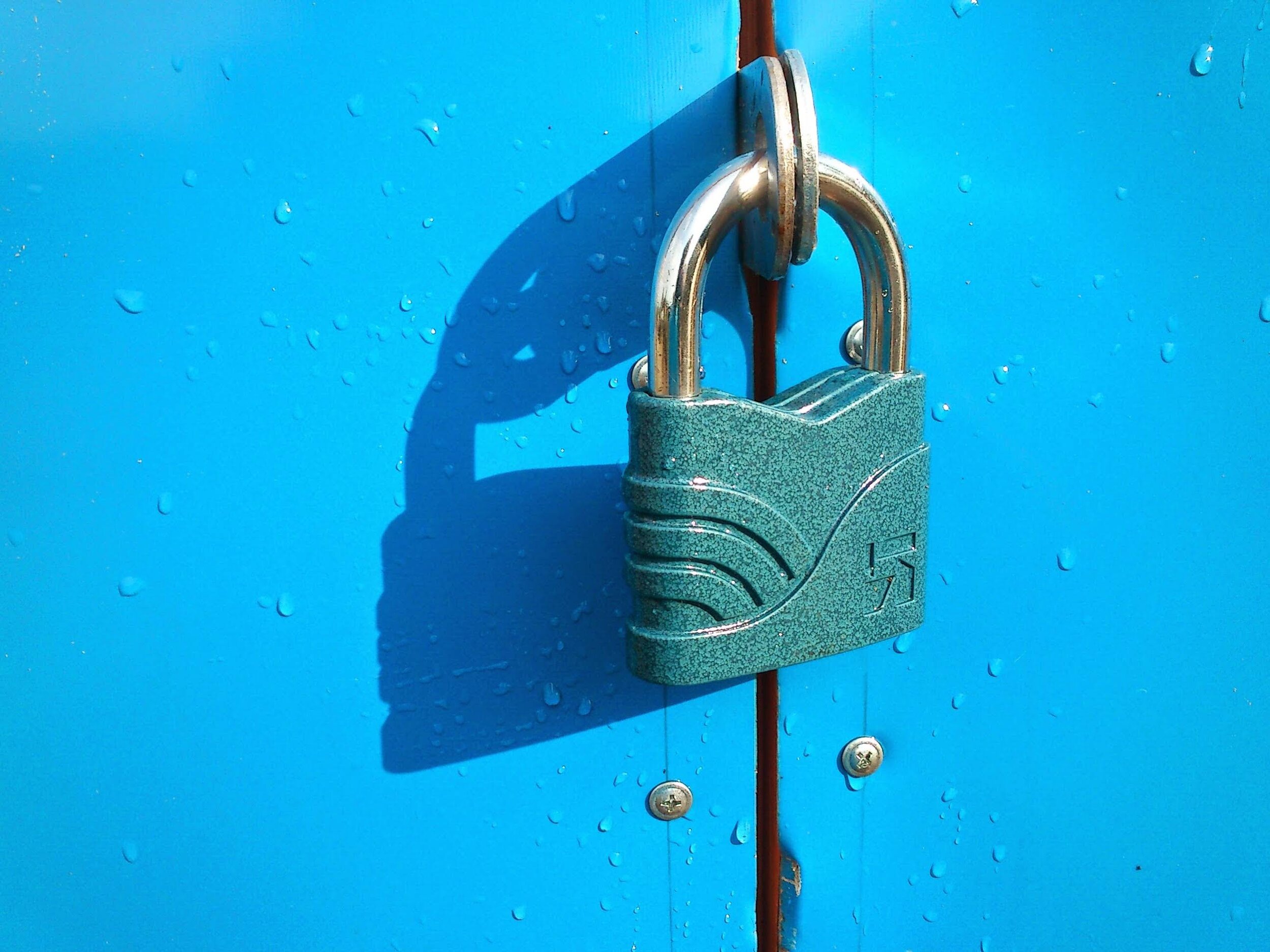

In the field of artificial intelligence, security concerns are becoming increasingly significant. AI systems are designed to assist in a multitude of tasks, but their potential for misuse poses substantial risks.

One prominent threat is the concept of AI jailbreaks, where malicious actors exploit vulnerabilities to bypass the safety measures and guardrails embedded within AI models. These attacks can lead AI to generate harmful or inappropriate content, violating the intended ethical guidelines and operational parameters. Understanding and mitigating these threats is crucial for maintaining the integrity and safety of AI technologies.

What is an AI Skeleton Key?

AI Skeleton Key represents a significant breakthrough in the field of AI security vulnerabilities, unveiling a newly discovered form of AI jailbreak that undermines the integrity of generative AI models. Initially introduced as "Master Key" during a Microsoft Build talk, Skeleton Key has since become a critical focal point for researchers and developers concerned with AI safety.

The core functionality of Skeleton Key lies in its ability to by-pass the safety measures and guardrails that are foundational to AI model design. These safety protocols are implemented to prevent AI systems from generating harmful or restricted content, such as misinformation, illegal instructions, or sensitive data. However, Skeleton Key exploits a fundamental weakness in these protections, allowing malicious actors to bypass these safeguards with relative ease.

The attack operates through a sophisticated multi-step strategy. It involves manipulating the AI model's behaviour by gradually introducing and reinforcing new, unsafe guidelines. This method effectively tricks the AI into ignoring its original safety protocols, thereby enabling it to produce outputs that would normally be restricted. For example, an attacker might use carefully crafted prompts to convince the AI to provide instructions for making a Molotov Cocktail or other dangerous content, which the AI would typically refuse to generate under normal circumstances.

The implications of this vulnerability are profound. By bypassing the built-in safety measures, Skeleton Key threatens the reliability and trustworthiness of AI systems. It highlights the potential for AI models to be exploited for malicious purposes, jeopardizing user safety and undermining the integrity of the systems that rely on them. This vulnerability not only poses risks to the security of individual AI applications but also challenges the broader field of AI development, necessitating a re-evaluation of current security practices and the implementation of more robust countermeasures.

Addressing the Skeleton Key threat requires a comprehensive approach to AI security. This includes enhancing input and output filtering mechanisms, reinforcing system prompts to ensure adherence to safety guidelines, and developing proactive monitoring systems to detect and mitigate potential abuse. By fortifying these defenses and staying vigilant against emerging threats, developers can better protect their AI systems from exploitation and maintain the trust and safety that are essential to the responsible deployment of AI technologies.

Case Study: GPT-4 Resistance

GPT-4 has demonstrated a notable degree of resilience against the Skeleton Key attack, reflecting its robust design and advanced security features. Unlike many other AI models, GPT-4 effectively distinguishes between system messages and user requests, which helps mitigate the impact of direct manipulations aimed at bypassing its safety protocols. This capability stems from its architectural design, which emphasizes the separation of different types of inputs to prevent unauthorized changes to its behaviour through simple prompt injections.

Despite this enhanced robustness, GPT-4's defenses are not entirely foolproof. A subtle but critical vulnerability remains: the model can still be compromised if behaviour update requests are embedded within user-defined system messages rather than appearing as direct user inputs. This reveals that while GPT-4's safeguards offer significant protection, they do not render it completely immune to sophisticated manipulation techniques.

The partial resistance observed in GPT-4 highlights the ongoing need for continuous improvement in AI safety measures. As advanced jailbreak attacks like Skeleton Key evolve, maintaining and enhancing the security of AI systems requires persistent vigilance and adaptation. Developers and researchers must continue to refine their defenses, ensuring that even the most sophisticated attack techniques are effectively countered. This commitment to advancing AI security is crucial for safeguarding the integrity and reliability of AI technologies in an increasingly complex threat.

Protective Measures and Mitigations

To guard against Skeleton Key attacks, AI developers should adopt a multi-layered approach to security. Firstly, input filtering is crucial. This involves implementing systems that can detect and block harmful or malicious inputs which might lead to a jailbreak. Ensuring that only safe, verified inputs reach the model helps in maintaining the integrity of the AI system.

Secondly, prompt engineering plays a vital role. By clearly defining system prompts, developers can reinforce appropriate behavior guidelines for the AI. This includes explicitly instructing the model to resist attempts to undermine its safety protocols. Prompt engineering helps in setting robust boundaries that the AI should not cross, even when manipulated by sophisticated prompts.

Output filtering is another critical measure. Post-processing filters should be used to analyze the AI's output, ensuring that any generated content is free from harmful or inappropriate material. This step acts as a second layer of defense, catching any malicious content that might slip through input filtering.

Abuse monitoring involves deploying AI-driven systems that continuously monitor for misuse patterns. These systems use content classification and abuse detection methods to identify and mitigate recurring issues. By constantly analyzing interactions, abuse monitoring helps in early detection and response to potential threats, maintaining the AI's safety over time.

Microsoft provides several tools to support these protective measures. Prompt Shields in Azure AI detect and block malicious inputs, ensuring that harmful prompts do not reach the AI model. Additionally, PyRIT (Python Red Teaming for AI) includes tests for vulnerabilities like Skeleton Key, helping developers identify and address weaknesses in their AI systems.

Integrating these measures at every phase of AI development is crucial. From the initial design stages to deployment and ongoing maintenance, a comprehensive security strategy ensures a strong defense against sophisticated attacks like a Skeleton Key. By prioritizing security throughout the AI lifecycle, developers can safeguard their models against evolving threats, maintaining the integrity and safety of their AI technologies.

Real-World Applications and Importance

The significance of addressing AI security has never been more crucial as artificial intelligence technologies have become increasingly embedded in our daily lives and business operations. Applications such as chatbots and Copilot AI assistants, which handle a wide array of tasks from customer support to complex decision-making, are prime targets for malicious activities. These systems are designed to enhance user experience and operational efficiency, but their widespread use also makes them attractive to attackers aiming to exploit vulnerabilities for harmful purposes.

For instance, chatbots, which interact directly with users, can inadvertently become channels for sensitive data breaches or misinformation if not properly secured. Similarly, Copilot AI assistants, which assist with a range of functions from coding to content creation, could be manipulated to produce undesirable outputs or bypass safeguards if their security measures are insufficient. Recent developments, such as the Skeleton Key jailbreak attack, highlights the potential risks associated with these technologies. Skeleton Key is a sophisticated method used to bypass AI models' built-in safety measures, allowing attackers to generate forbidden content or misinformation by exploiting the AI's vulnerabilities.

Strong countermeasures are essential to maintaining the integrity and safety of AI systems. Implementing extensive security strategies helps ensure that AI models adhere to their intended guidelines and operate within established safety boundaries. This includes using advanced input and output filtering mechanisms, designing resilient system prompts to reinforce safety protocols, and establishing proactive abuse monitoring systems. By integrating these defensive measures, organizations can protect their AI applications from being compromised, thereby safeguarding user data and maintaining trust. The proactive adaptation and enhancement of security protocols are vital in mitigating emerging threats and ensuring the responsible deployment of AI technologies across various applications.

Finally, Partnering with a software development company can significantly strengthen your defences against vulnerabilities like Skeleton Key. Expert development partners bring specialized knowledge and experience in designing and implementing strong AI security measures. These partners can assist in creating comprehensive security frameworks tailored to your specific needs, integrating advanced threat detection systems, and ensuring adherence to best practices in AI safety.

Conclusion

The Skeleton Key threat represents a significant and evolving challenge in AI security, highlighting the potential vulnerabilities in current generative AI models. This sophisticated jailbreak technique has demonstrated its ability to bypass established safety mechanisms, allowing attackers to manipulate AI systems into generating harmful or forbidden content. By exploiting a core vulnerability, Skeleton Key highlights the critical need for strong and adaptable security measures in AI technologies. The ability of this attack to compromise the integrity of various leading AI models including Meta's Llama3, Google's Gemini Pro, and OpenAI's GPT series illustrates the widespread nature of the risk and the potential for serious repercussions if left unaddressed.

In light of these developments, continuous vigilance and proactive security measures are imperative in AI development. Ensuring that AI systems are safeguarded against such vulnerabilities requires a multifaceted approach that includes rigorous input and output filtering, strong system prompt design, and proactive abuse monitoring. The rapid advancement of attack techniques like Skeleton Key calls for organizations to remain agile in their security practices, regularly updating their defenses to counteract emerging threats. By maintaining a proactive stance and integrating comprehensive security strategies, developers can better protect their AI applications from exploitation, preserving the integrity and safety of these transformative technologies.

Have you heard of an AI Skeleton Key? What safeguard do you have in place to mitigate these? let us know in the comments below!

If you are looking for a trusted software development partner to help strengthen your cybersecurity, or assist you with custom software solutions, feel free to contact us.

Written by Natalia Duran

—

ISU Corp is an award-winning software development company, with over 17 years of experience in multiple industries, providing cost-effective custom software development, technology management, and IT outsourcing.

Our unique owners’ mindset reduces development costs and fast-tracks timelines. We help craft the specifications of your project based on your company's needs, to produce the best ROI. Find out why startups, all the way to Fortune 500 companies like General Electric, Heinz, and many others have trusted us with their projects. Contact us here.